Outraged by a failed attempt to acquire European carmaker Ferrari, Henry Ford II sets out to trounce Enzo Ferrari on his own playing field – automobile endurance racing. Unfortunately, the effort falls short, leading Ford to turn to independent car designer, Carroll Shelby. But Shelby’s outspoken lead test driver, Ken Miles, complicates the situation by making an enemy out of Ford Senior VP Leo Beebe. Nevertheless, Shelby and his team are able to build one of the greatest race cars ever – the GT40 MkII – setting the showdown between the two auto legends at the 1966 24 Hours of Le Mans. Matt Damon and Christian Bale star as Shelby and Miles.

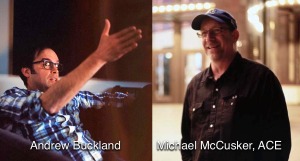

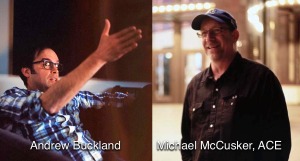

The challenge of bringing this clash of personalities to the screen was taken on by director James Mangold (Logan, The Wolverine, 3:10 to Yuma) and his team of longtime collaborators. I recently spoke with film editors Michael McCusker, ACE (Walk the Line, 3:10 to Yuma, Logan) and Andrew Buckland (The Girl on the Train) about what it took to bring Ford v Ferrari together.

_____________________________________________

[OP] The post team for this film has worked with James Mangold on quite a few films. Tell me a bit about the relationship.

[MM] I cut my very first movie, Walk The Line, for Jim 15 years ago and have since cut his last six movies. I was the first assistant editor on Kate & Leopold, which was shot in New York in 2001. That’s where I met Andrew, who was hired as one of the local New York film assistants. We became fast friends. Andrew moved out to LA in 2009 and I hired him to assist me on Knight & Day. We’ve been working together for 10 years now.

I always want to keep myself available for Jim, because he chooses good material, attracts great talent, and is a filmmaker with a strong vision who works across multiple genres. Since I’ve worked with him, I’ve cut a musical movie, a western, a rom-com, an action movie, a straight-up superhero movie, a dystopian superhero movie, and now a car racing film.

[OP] As a film editor, it must be great not to get type-cast for any particular cutting style.

[MM] Exactly. I worked for David Brenner for years as his first. He was able to cross genres and that’s what I wanted to do. I knew even then that the most important decisions I would make would be choosing projects. I couldn’t have foreseen that Jim was going to work across all these genres – I simply knew that we worked well together and that the end product was good.

[OP] In preparing for Ford v Ferrari, did you study any other recent racing films, like Ron Howard’s Rush?

[MM] I saw that movie and liked it. Jim was aware of it, too, but I think he wanted to do something a little more organic. We watched a lot of older racing films, like Steve McQueen’s Le Mans and Frankenheimer’s Grand Prix. Jim’s original intention was to play the racing in long takes and bring the audience along for the ride. As he was developing the script and we were in preproduction, it became clear that there was so much more drama that was available for him to portray during the racing sequences than he anticipated. And so, the races took on more of an energized pace.

[OP] Energized in what way? Do you mean in how you cut it or in a change of production technique, like more stunt cameras and angles?

[MM] I was fortunate to get involved about two-and-a-half months prior to the start of production. We were developing the Le Mans race in pre-vis, which required a lot of editing and discussions about shot design and figuring out what the intercutting was going to be during that sequence, which is like the fourth act of the movie. You’re dealing with Mollie and Peter [Ken Miles’ wife and son] at home watching the race, the pit drama, what’s going on with Shelby and his crew, with Ford and Leo Beebe, and also, of course, what’s going on in the car with Ken. It’s a three act movie unto itself, so Jim was trying to figure out how it was all going to work, before he had to shoot it. That’s where I came in. The frenetic pace of Le Mans was more a part of the writing process – and part of the writing process was the pre-vis. The trick was how to make sure we weren’t just following cars around a track. That’s where redundancy can tend to beleaguer an audience in racing movies.

[OP] What was the timeline for production and post?

[MM] I started at the end of May 2018. Production began at the beginning of August and went all the way through to the end of November. We started post in earnest at the beginning of November of last year, took some time off for the holidays, and then showed the film to the studios around February or March.

The challenge was that there was going to be a lot of racing footage, which meant there was going to be a LOT of footage. I knew I was going to need a strong co-editor, so Andrew was the natural choice. He had been cutting on his own and cutting with me over the years. We share a common approach to editing and have a similar aesthetic. There was a point when things got really intense and we needed another pair of hands, so I brought in Dirk Westervelt to help out for a couple of months. That kept our noses above water, but the process was really enjoyable. We were never in a crisis mode. We got a great response from preview audiences and, of course, that calms everybody down. At that point it was just about quality control and making sure we weren’t resting on our laurels.

[OP] How long was your initial cut and what was your process for trimming the film down to the present run time?

[MM] We’re at 2:30:00 right now and I think the first cut was 3:10:00 or 3:12:00. The Le Mans section was longer. The front end of the movie had more scenes in it. We ended up lifting some scenes and rearranging others. Plus, the basic trimming of scenes brought the length down. But nothing was the result of a panic, like, “Oh my God, we’ve got to get to 2:30:00!” There were no demands by the studio or any pressures we placed upon ourselves to hit a particular running time. I like to say that there’s real time and there’s cinematic time. You can watch Once Upon a Time in America, which is 3:45:00, and feel like it’s an hour. Or you can watch an 89-minute movie and feel like it’s drudgery. We just wanted to make sure we weren’t overstaying our welcome.

[OP] How extensively did you re-arrange scenes during the edit? Or did the structure of the film stay pretty much as scripted?

[MM] To a great degree it stayed as scripted. We had some scenes in the beginning that we felt were a little bit tangential and weren’t serving the narrative directly and those were cut. The real endeavor of this movie starts the moment that these two guys [Shelby and Miles] decide to tackle the challenge of developing this car. There’s a scene where Miles sees the car for the first time at LAX. We understood that we had to get to that point in a very efficient way, but also set up all the other characters – their motives and their desires.

It’s an interesting movie, because it starts off with a lot of characters. But then it develops into a movie about two guys and their friendship. So it goes from an ensemble piece to being about Ken and Carroll, while at the same time the scope of the movie is opening up and becoming larger as the racing is going on. For us, the trickiest part was the front end – to make sure we spent enough time with each character so that we understood them, but not so much time that the audience would go, “Enough already! Get on with it!”

[OP] Were you both racing fans before you signed onto this film?

[AB] I was not.

[MM] When I was a kid, I watched a lot of racing. I liked CART racing – open wheel racing – not so much stock car racing. As I grew older, I lost interest, particularly when CART disbanded and NASCAR took over. So, I had an appreciation for it. I went to races, like the old Ontario 500 here in California.

[OP] Did that help inform your cutting style for this film?

[MM] I don’t think so. Where it helped was knowing the sound of the broadcasters and race announcers. I liked Chris Economaki and Jim McKay – guys who were broadcasting the races when I was a kid. I was intrigued about how they gave us the narrative of the race. It came in handy while we were making this movie, because we were able to get our hands on some of Jim McKay’s actual coverage of Le Mans and used it in the movie. That brings so much authenticity.

[OP] Let’s dive deeper into the sound for this film. I would imagine that sound design was integral to your rough cuts. How did you tackle that?

[AB] We were fortunate to have the sound team on very early during preproduction. We were cutting in a 5.1 environment, so we wanted to create sound design early in the process. The sounds may have not been the exact engine sounds that would end up in the final, but they were adequate to allow you to experience the scenes as intended and to give the right feel. Because we needed to get Jim’s response early, some of the races were cut with the production sound – from the live mics during filming. This allowed us and Jim to quickly see how the scenes would flow. Other scenes were cut strictly MOS, because the sound design would have been way too complicated for the initial cut of the scene. Once the scene was cut visually, we’d hand over the scene to Don [Sylvester, sound supervisor] who was able to provide us with a set of 5.1 stems. That was great, because we could recut and repurpose those stems for other races.

[MM] We had developed a strategy with Don to split the sound design into four or five stems to give us enough discrete channels to recut these sequences. The stems were a palette of interior perspectives, exterior perspectives, crowds, car-bys, and so on. By employing this strategy, we didn’t need to continually turn over the cut to sound for patch-up work. Then, as Don went out and recorded the real cars and was developing the actual sounds for what was going to be used in the mix, he’d generate new stems and we would put them into the Avid. This was extremely informative to Jim, because he could experience our Avid temp mix in 5.1 and give notes, which ultimately informed the final sound design and the mix.

[OP] What about temp music? Did you also weave that into your rough cuts?

[MM] Ted Caplan, our music editor, has also worked with Jim for 15 years. He’s a bit of a renaissance man – a screenwriter, a novelist, a one-time musician, and a sound designer in his own right. When he sits down to work with music, he’s coming at it from a story point-of-view. He has a very instinctual knowledge of where music should start and it happens to dovetail into the aesthetic that Jim, Andrew, and I are working towards. None of us like music to lead scenes in a way that anticipates what the scene is going to be about before you experience it.

Specifically, for this movie, it was challenging to develop what the musical tone of the movie would be. Ted was developing the temp track along with us from a very early stage. We found over time that not one particular musical style was going to work. Which is to say that this is a very complex score. It includes a kind of surf rock sound with Carroll Shelby in LA; an almost jaunty, lounge jazz sound for Detroit and the Ford executives; and then the hard-driving rhythmic sound for the racing.

(The final score was composed by Marco Beltrami and Buck Sanders.)

[OP] I presume you were housed in multiple cutting rooms at a central facility. Right?

[MM] We cut at 20th Century Fox, where Jim has a large office space. We cut Logan and Wolverine there before this movie. It has several cutting spaces. I was situated between Andrew and Don. Ted was next to Don and John Berri, our additional editor, and assistants were right around the corner. It makes for a very efficient working environment.

[OP] Since the team was cutting with Avid Media Composer, did any of its features stand out to you for this film?

[Both] FluidMorph! (laughs)

[MM] FluidMorph, speed-ramping – we often had to manipulate the shot speeds to communicate the speed of the cars. A lot of these cars were kit cars that could drive safely at a certain speed for photography, but not at race speed. So we had to manipulate the speed a lot to get the sense of action that these cars have.

[OP] What about Avid’s Script Integration feature, often referred to as ScriptSync? I know a lot of narrative editors love it.

[MM] I used ScriptSync once a few years ago and I never cut a scene faster. I was so excited. Then I watched it and it was terrible. To me there’s so much more to editing than hitting the next line of dialogue. I’m more interested in the lines between the lines – subtext. I found that with ScriptSync I could put the scene together quickly, but it was flat as a pancake. I do understand the value of it in certain applications. For instance, I think it’s great on straight comedy. It’s helpful to get around and find things when you are shooting tons of coverage for a particular joke. But for me, it’s not something I lean on. I mark up my own dailies and find stuff that way.

[OP] Tell me a bit more about your organizational process. Do you start with a KEM roll or stringouts of selected takes?

[MM] I don’t watch dailies, which sounds weird. By that I mean, I don’t watch them in a traditional sense. I don’t start in the morning, watch the dailies, and then start cutting. And I don’t ask my assistants to organize any of my dailies in bins. I come in and grab the scene that I have in front of me. I’ll look at the last take of every set-up really quickly and then I spend an enormous amount of time – particularly on complex scenes – creating a bin structure that I can work with. Sometimes it’s the beats in a scene, sometimes I organize by shot size, sometimes by character – it depends on what’s driving the scene. That’s the way I learn my footage – by organizing it. I remember shot sizes. I remember what was shot from set-up to set-up. I have a strong visual memory of where things are in a bin. So, if I ask an assistant to do that, then I’m not going to remember it. If I do it myself, then I’ll remember it. If there are a lot of resets or restarts in a take, I’ll have the assistant mark those up. But, I’ll go through and mark up beats or pivotal points in a scene, or particularly beautiful moments. And then I’ll start cutting.

[AB] I’ve adopted a lot of Mike’s methodology, mainly because I assisted Mike on a few films. But it actually works for me, as well. I have a similar aesthetic to Mike. I’ve used ScriptSync before and I tend to agree that it discourages you from seeing – as Mike described – the moments between lines. Those moments are valuable to remember.

[OP] I presume this film was shot digitally. Right?

[MM] It was primarily shot with [ARRI] Alexa 65 LF cameras, plus some other small format cameras. A lot of it was shot with old anamorphic lenses on the Alexa that allowed them to give it a bit of a vintage feeling. It’s interesting that as you watch it, you see the effect of the old lenses. There’s a fall-off on the edges, which is kind of cool. There were a couple of places where the subject matter was framed into the curve of the lens, which affects the focus. But we stuck with it, because it feels ‘of the time.’

[OP] Since the film takes place in the 1960s and with racing action sequences, I presume there were quite a few visual effects to properly place the film in time. Right?

[MM] There’s a ton of that. The whole movie is a period film. We could temp certain things in the Avid for the rough cuts. John Berri was wrangling visual effects. He’s a master in the Avid, but also Adobe After Effects. He has some clever ways of filling in backgrounds or green screens with temp elements to give the director an idea of what’s going to go there. We try to do as much temp work in the Avid as we are capable of doing, but there’s so much 3D visual effects work in this movie that we weren’t able to do that all of the time.

The caveat, though, is that the racing is real. The cars are real. The visual effects work was for a lot of the backgrounds. The movie was shot almost entirely in Los Angeles with some second unit footage shot in Georgia. The current, modern day Le Mans track isn’t at all representative of what Le Mans was in 1966, so there was no way to shoot Le Mans. Everything had to be doubled and then augmented with visual effects. In addition to Georgia, where they shot most of the actual racing for Le Mans, they went for a week to France to get some shots of the actual town of Le Mans. Of those, I think only about four of those shots are left. (laughs)

[OP] Any final thoughts about how this film turned out?

[MM] I’m psyched that people seem to like the film. Our concern was that we had a lot of story to tell. Would we wear audiences out? We continually have people tell us, “That was two and a half hours? We had no idea.” That’s humbling for us and it’s a great feeling. It’s a movie about these really great characters with great scope and great racing. That goes back to the very advent of movies. You can put all the big visual effects in a film that you want to, but it’s really about people.

[AB] I would absolutely agree. It’s more of a character movie with racing. Also, because I am not a ‘racing fan’ per se, the character drama really pulled me into the film while working on it.

[MM] It’s classic Hollywood cinema. I feel proud to be part of a movie that does what Hollywood does best.

The article is also available at postPerspective.

For more, check out this interview with Steve Hullfish.

©2019 Oliver Peters